Wednesday, 30 January 2008

Official website: http://globalsystembuilder.com

Download source from CodePlex: http://www.codeplex.com/gsb

Friday, 04 January 2008

I am working on a new user interface for my web-based IDE, called Global System Builder. It will use a 3D map-based user interface. So how does a 3D map-based user interface work with an Integrated Development Environment? You will see in the following posts. First step is the evaluation.

I lived in Calgary, Alberta, Canada for quite a few years and know the downtown area quite well. One of its famous landmarks is the Calgary Tower. So I wanted to see how the two major (free) players compared to the real thing (above).

Check this out: Google Earth on the left and Virtual Earth 3D on the right. Hmmm… interesting. Google Earth’s picture is a drawing. Now I have played with SketchUp and it is incredibly powerful for modeling in 3D – including the interior of a building. However, that is not the case with the Calgary Tower here. You may want to look at Google’s 3D Warehouse, as it has a nice inventory of 3D objects.

Now Virtual Earth’s image on the right is interesting as the software used by MS to gather and make the 3D images got messed up a bit on the Calgary Tower. However, if you look past that and compare the background buildings in the images, Microsoft’s sure looks more realistic.

Microsoft also has a 3D modeling tool like Google’s SketchUp, called 3DVIA. It appears to have most of the same capabilities as SketchUp, but I need to spend more time with it. And, similar to Google’s warehouse, Microsoft has a way to place your models on Virtual Earth.

So how do the technologies compare? Well, Microsoft has opted for a web-based approach using a JavaScript control that you can play with live and view the source of. Google Earth is a thick-client exe, and its SDK comes with a C# .NET wrapper; you will need a Subversion client like TortoiseSVN to get the source.

Having developed with both, I must say that the Google thick client is much smoother and quicker than the Microsoft web-based client. When you zoom in, rotate, pan, etc., and play around with the 3D features, Google Earth’s movements are much smoother, and it seems to download and render much quicker than Virtual Earth. Now this could be my internet connection speed to the data centers, the number of hops or … a whole bunch of things, but it still leaves me with this impression.

Other than that, there is a very specific feature in one product that is not in the other, which will make the decision for me. Wonder what it is?

Finally, a company called 3Dconnexion has developed a 3D mouse called SpaceNavigator that can fly around and through these 3D worlds and models. I have one on order and can’t wait to try it out! If you want to see what it can do, have a look at this interesting “fly by:”

I did look at NASA’s World Wind and it is really impressive, but I have not had enough time to evaluate whether it meets my needs or not. One immediate shortcoming is the inability to draw and import a 3D model, or so it seems…

Still trying to figure out how a 3D map-based user interface will work with a programmer’s IDE? Stay tuned.

Wednesday, 26 September 2007

In Part 1, I briefly introduced the guide to the Software Engineering Body of Knowledge (SWEBOK) that was published in 2004. “The IEEE Computer Society establishes for the first time a baseline for the body of knowledge for the field of software engineering…” We will come back to the SWEBOK in a future post, as this post is about how to qualify for “professional” league play.

In Part 1, I discussed software engineering as being a team sport. This is nothing new as far as I am concerned, but I am still amazed when a multi-million dollar software development project is assigned to, and executed by, a newly assembled team. This team has never played together, and quite likely ranges from shinny players to NHL stars and everything in between. Now imagine that this team is somehow “classified” as an amateur Junior “B” league hockey team and their very first game is against a real NHL professional hockey team. What is the likelihood of the B hockey team winning? Don’t forget, the B team has not played together as a team yet. Their first game together is against an NHL pro hockey team. Did I mention that just before actual game play the team figures out that they are missing their Center, who also happens to be the Captain of the team? Again, what is the likelihood of success?

Of course, this hockey scenario would never happen in real life, but it certainly happens in our software development world, where teams (assembled or not) get assigned software development projects that are so far beyond their software engineering capabilities that they never have a chance at successful delivery. I see it every day. And to varying degrees, I have seen it over the past 16 years of being in the software development business. I have been fortunate, as some of the dev teams I have worked on are at “professional” league play, where all team members have 10+ years of software engineering experience. Aside from work being fun on these teams, our success rate for the design and delivery of software on time and on budget was very high. Our motto was: give us the biggest, baddest dev project you’ve got – c’mon, bring it on!

However, most teams I have worked on have been a mix of shinny players to pros. Our success rate was highly variable. And even more variable (chance has better odds) if the model is assembling players from the “resource pool.” Some of the shinny players have aspirations of becoming pros and take to the work, listening to the guidance of the pros. Other shinny players are finding out for the first time what it means to try out in the pro league and are questioning whether they are going to pursue their career in software development. It’s a hard road, being unindustrialized and all.

There are shinny players (and pro posers) that try real hard but simply don’t have the knowledge or skills (aptitude?) to play even at the shinny level, for whatever reason. This is especially difficult to deal with, if one or more of this type of player happens to be on your team. It is the equivalent of carrying a hockey player on your back in the game because s/he can’t skate. Never happens in the pro hockey league, but amazingly often in our software development world. If our software development world was more “visible”, you would see in every organization that develops software of any sort, some people wandering around carrying a person on their back. It would be kind of amusing to see, unless of course you were the one carrying… or being carried.

That is the irony of what we do as software developers. It is nowhere near as “visible” as a team (or even individual) sport where everyone can visibly see exactly what you are doing. And even if people could see what you are doing, it still may not matter, because to most people, other than software developers, software engineering is a complete mystery.

So how does one find out what level of league play you are at? One problem in our software development world is that we do not have levels of team play. We are not that mature (i.e. industrialized) yet. Well, some would say that the CMMI has levels of play and I would agree, but I am talking about individual level here first, then we will discuss what that means when you qualify to be on a team (or even the remote possibility of finding a team that has the same knowledge and skill level you do). Another way to determine your knowledge and skill level is through certifications. There are several ways to get certified. Some popular ones are: Certified Software Development Professional and the Open Group's IT Architect Certification Program. Other certifications are directly related to vendors’ products such as Microsoft’s Certified Architect and Sun's Certified Enterprise Architect.

For this series of articles, I am looking at level of play as being a “professional software engineer.” I firmly believe that software development is an engineering discipline and it appears that there is only one association, in the province I live in, that recognizes this and that is the Association of Professional Engineers and Geoscientists of BC (APEGBC). That designation is called a Professional Engineer or P.Eng. “The P.Eng., designation is a professional license, allowing you to practice engineering in the province or territory where it was granted. Only engineers licensed with APEGBC have a legal right to practice engineering in British Columbia.” Of course, this may be slightly different depending on your geographic location.

Note how this is entirely different than any other certification – it is a professional license giving you a legal right to practice software engineering. “The term Professional Engineer and the phrase practice of professional engineering is legally defined and protected both in Canada and the US. The earmark that distinguishes a licensed/registered Professional Engineer is the authority to sign and seal or "stamp" engineering documents (reports, drawings, and calculations) for a study, estimate, design or analysis, thus taking legal responsibility for it.”

Ponder this for a while: what would it mean in your software development job right now if you were a professional software engineer and you were legally responsible for the software that you designed? How would that change your job today? I know it would change mine.

None of this is news to practicing electrical, electronics and the various other engineers, as this has been standard practice in their disciplines for years, even decades. Yet it is seemingly all new to us software professionals.

Let’s take a look at the requirements for applying for P.Eng., specifically related to software engineering. The two documents are: “2004 SOFTWARE ENGINEERING SYLLABUS and Checklist for Self-Evaluation” and “APEGBC Guide for the Presentation and Evaluation of Software Engineering Knowledge and Experience.”

From the Software Engineering Syllabus: “Nineteen engineering disciplines are included in the Examination Syllabi issued by the Canadian Engineering Qualifications Board (CEQB) of the Canadian Council of Professional Engineers (CCPE). Each discipline examination syllabus is divided into two examination categories, compulsory and elective. Candidates will be assigned examinations based on an assessment of their academic background. Examinations from discipline syllabi other than those specific to the candidate’s discipline may be assigned at the discretion of the constituent Association/Ordre. Before writing the discipline examinations, candidates must have passed, or have been exempted from, the Basic Studies Examinations.”

That’s right – exams. While I have 16 years of professional software experience, I may end up having to write exams. I wrote 17 exams when I took a postgraduate Software Engineering Management program, so what’s a few more? I say bring it on. Oh yeah, did I mention I was applying to become a professional software engineer? I want to work on teams near or at “professional” league play. Practice what you preach, eh? I will be documenting the process and my progress through this blog.

Open up the Software Engineering Syllabus PDF and have a look for yourself. Can you take your existing education and map it to the basic studies? How about that Digital Logic Circuits? Or how about discrete mathematics, remember your binomial theorem? Now look at Group A. Unless you are a very recent Comp Sci grad, it is unlikely you took Software Process – so perhaps exams for everyone! Who’s with me?!

While I am having a little fun here, no matter what, it is going to be work. And that’s the point, you don’t become a professional engineer overnight.

In addition to the educational requirements, have a look at the Presentation and Evaluation of Software Engineering Knowledge and Experience PDF. You need a minimum of 4 years of satisfactory software engineering experience in these 6 main software engineering capabilities:

- Software Requirements

- Software Design and Construction

- Software Process Engineering

- Software Quality

- Software Assets Management

- Management of Software Projects

Some capabilities are further decomposed into sub-capabilities. To each capability area can be associated:

- the fundamental knowledge or theory, indirectly, pointing to the model academic curriculum of Software Engineering,

- the applicable industry standards,

- the recognized industry practices and tools,

- and a level of mandatory or optional experience on real projects.

The document then defines what these capabilities and sub-capabilities mean. Finally, the document provides a suggested presentation of experience and an example of how to lay out your projects. It seems straightforward enough, but when you sit down and actually go through it, remembering the projects you worked on, which capabilities were used and how they map to the sub-capabilities, it can take a while.

While I won’t go through the details of the other requirements, which you can read in this 25-page application guide, two other items stand out: one is writing the Professional Practice Exam (in addition to attending the Law and Ethics Seminar), and the other is references.

The Professional Practice Exam. Before being granted registration as a Professional Engineer, you must pass the Professional Practice Examination. The Professional Practice Examination is a 3-hour examination consisting of a 2-hour multiple-choice section and a 1-hour essay question. The examination tests your knowledge of Canadian professional practice, law and ethics. There is a (large) book reference that you need to study in order to prepare for the exam.

The reference form is interesting in the sense that the Association requires that Referees be Professional Engineers with first-hand working knowledge of the applicant’s work and that the applicant must have been under suitable supervision throughout the qualifying period. I don’t know what your experience has been, but in my 16 years of being in the software development business, I have only worked with two professional engineers.

One more specific aspect I would like to point out is the APEGBC Code of Ethics. The purpose of the Code of Ethics is to give general statements of the principles of ethical conduct in order that Professional Engineers may fulfill their duty to the public, to the profession and their fellow members. There are 10 specific tenets and while I understand and appreciate each one, there is one in particular that is very apt to the state of software engineering today and that is:

(8) present clearly to employers and clients the possible consequences if professional decisions or judgments are overruled or disregarded;

You know what I mean.

This sums up the application process for becoming a professional software engineer. As you can see it is considerable effort and can take 3 to 6 months to acquire your license. However, the main benefit is that it tells employers that they can depend on your proven skills and professionalism. The main benefit for me is to use it as a qualifier for any new software development teams I will join in the future. My goal is to work on teams that are at the “NHL” level of play.

In Post 3 we are going to dive into the SWEBOK for an explanation of what the guide to Software Engineering Body of Knowledge is and how through this knowledge and practice, we as software professionals, can assist in the industrialization of software.

Thursday, 13 September 2007

“In spite of millions of software professionals worldwide and the ubiquitous presence of software in our society, software engineering has only recently reached the status of a legitimate engineering discipline and a recognized profession.”

Software Engineering Body of Knowledge (SWEBOK) 2004.

“Software industrialization will occur when software engineering reaches critical mass in our classrooms and workplace worldwide as standard operating procedure for the development, operation and maintenance of software.”

Mitch Barnett 2007.

I had the good fortune to have been taught software engineering by a few folks from Motorola University early in my career (1994 – 96). One of the instructors, Karl Williams, said to us on our first day of class, “we have made 30 years of software engineering mistakes which makes for a good body of knowledge to learn from.” He wasn’t kidding. A lot of interesting stories were told over those two years, each of which had an alternate ending once software engineering was applied.

Over 16 years, I have worked in many different software organizations, some categorized as start-ups, others as multi-nationals like Eastman Kodak and Motorola, and a few in between. I have performed virtually every role imaginable in the software development industry: Business Analyst, Systems Analyst, Designer, Programmer, Developer, Tester, Software/Systems/Technical Architect, Project/Program/Product Manager, QA Manager, Team Lead, Director, Consultant, Contractor, and even President of my own software development company with a staff of 25 people. These roles encompassed several practice areas including product development, R&D, maintenance and professional services.

Why am I telling you this? Well, you might consider me to be “one” representative sample in the world of software engineering because of the varied roles, practice areas and industries that I have been in. For example, in one of the large corporations I worked in, when a multi-million dollar software project gets cancelled, for whatever reason, it does not really impact you. However, if you happen to be the owner of the company, like I have been, and a software project of any size gets cancelled, it directly affects you, right in the pocket book.

I wrote this series on software engineering to help people in our industry understand what the real world practice of software engineering is, how it might be pragmatically applied in the context of your day to day work, and, if possible in your geographical area, how to become a licensed professional software engineer. Whether you are a seasoned pro or a newbie, hopefully this series will shed some light on what real world software engineering is from an “in the trenches” perspective.

One interesting aspect of real world software engineering is trying to determine the knowledge and skill of the team you are on or maybe joining in the near future if you are looking for work. While there are various “maturity models” to assist in the evaluation process, currently only a small percentage of organizations use these models and even fewer have been assessed.

Did I mention that software engineering is a team sport? Sure you can get a lot done as “the loner”, and I have been contracted like that on occasion. I am also a team of one on my pet open source project. However, in most cases you already are, or will be part of a team. From a software engineering perspective, how mature is the team? How would you tell? I mean for sure. And why would you want to know?

Software engineering knowledge and skill levels are what I am talking about. Software engineering is primarily a team sport, so let’s use a sports team analogy, and since I am from Canada, it’s got to be hockey. A real “amateur” hockey game may be termed “pick up” hockey or as we call it in Canada, “shinny.” This is where the temperature has dropped so low that the snow on the streets literally turns to ice – perfect for hockey. All you need are what look like hockey sticks, a makeshift goal and a sponge puck. I can tell you that the sponge puck starts out nice and soft at room temperature, but turns into a real hard puck after a few minutes of play. The first lesson you learn as a potential hockey star is to wear “safety” equipment for the next game.

Pick-up hockey is completely ad-hoc with minimal rules and constraints. At the other end of the spectrum, where the knowledge and skill level is near maximum maturity level, is the NHL professional hockey team. The team members have been playing their roles (read: positions) for many years and have knowledge and skills that represent the top of their profession.

How does one become a professional hockey player? It usually starts with that game of shinny and over the years you progress through various leagues/levels until at some point in your growth, you decide that you want to become a professional hockey player. Oh yes, I know a little bit about talent (having lived the Gretzky years in Edmonton), luck of the draw, the level of competition and sacrifices required. The point being it is pretty “obvious” when you join a hockey team what knowledge and skill level the team is at. And if it isn’t, it becomes pretty apparent on the ice in about 10 minutes whether you are on the right team or not – from both your and the team’s perspective.

In the world of software engineering, it is not obvious at all if you are on the right team or not and may take a few months or longer to figure that out. Why? Unlike play on the ice where anyone can see you skate, stick handle and score, it is very difficult for someone to observe you think, problem solve, design, code and deliver quality software on time, on budget. This goes for your teammates as well as the evaluation of… you.

Software engineering is much more complicated than hockey for many different reasons, one of them being that the playing field is not in the self-contained physical world of the hockey rink. The number of “objects” from a complexity point of view is very limited in hockey, in fact, not much more complex than when you first started out playing shinny, other than the addition of safety gear, a small rule booklet and a hockey rink.

The world of software engineering is quite a bit more complex and variable, particularly in the knowledge and skills of the team players. It is more likely that your team has a shinny player or two, an NHL player and probably a mix of everything in between. Without levels or leagues to progress through, it is more than likely, in fact it is probably a given, that the team is composed of members at all different levels of software engineering knowledge and skill. Why is this not a good thing?

This knowledge and skills mix is further exacerbated by the popularity of “resource pools” that organizations favor these days. The idea is to assemble a team for whatever project comes up next with resources that are available from the pool. As any coach will tell you, at any level of league play, you cannot assemble a team overnight and expect them to win their first game or play like a team that has been playing together for seasons of play. This approach just compounds the fact of a mixed skill level team by just throwing them together and expecting a successful delivery of the software project on their very first try. We have no equivalent to “try outs” or training camps.

And that’s a big issue. People like Watts Humphrey, who was instrumental in developing the Software Engineering Institute’s Capability Maturity Model, realized this and developed the Personal Software Process. Before you can play on a team, there is some expectation that the knowledge and skill levels are at a certain point where team play can actually occur with some certainty of what the outcome is going to be. I have a lot of respect for Watts.

So how do we assess our software engineering knowledge and skills? In part, that is what the guide to the SWEBOK is about. It identifies the knowledge areas and provides guidance to book references that cover those knowledge areas for what is accepted as general practice for software engineering.

The other assessment part is to compare our knowledge and skills to what is required to become a licensed professional engineer (P.Eng.). We will do this first before we look at SWEBOK in detail.

I see licensing professional software engineers as a crucial step towards software industrialization. I base this on my “in the trenches” experience in the electronics world prior to moving into the software development world. The professional electronics engineers I worked with had a very similar “body of knowledge”, except in electronics engineering, that was required to be understood and practiced for 4 years in order to even qualify to become a P. Eng.

Most importantly, the process of developing an R&D electronics product and preparing for mass production, which I participated in for two years a long long time ago, was simply standard operating procedure. There was never any question or argument as to what the deliverables were at each phase of the project, who had to sign them off, how the product got certified, why did we design this way, etc. Comparatively speaking to our software industry, that’s what the guide to the Software Engineering Body of Knowledge is all about, a normative standard operating procedure for the development, operation and maintenance of software. Yes, it is an emerging discipline, yes, it has limitations, but a good place to start don’t you think?

In Part 2, we are going to look at the requirements in British Columbia for becoming a licensed software engineer. We will use these requirements to assess the knowledge and skill level to uncover any gaps that might need to be filled in the way of exams or experience. If you want to have a look ahead, you can review the basic requirements for becoming licensed as a P. Eng., and the specific educational requirements and experience requirements for becoming a professional software engineer.

PS. Part 2 posted

PPS. Happy Unofficial Programmer's Day!

Friday, 03 August 2007

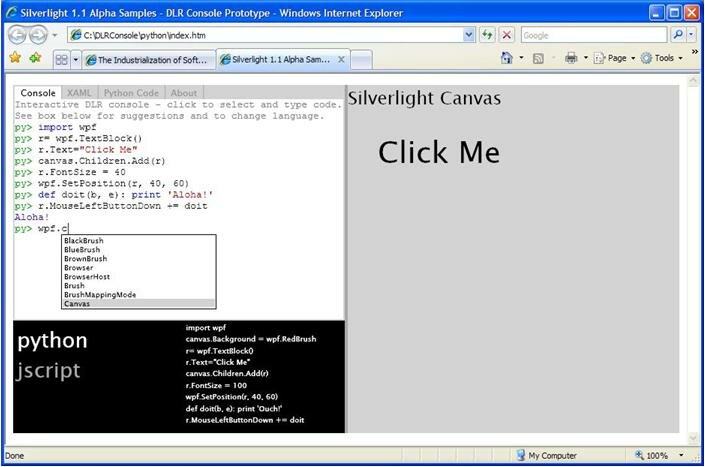

In part 1, I introduced a distributed programming environment called Global System Builder (GSB). The IDE is an ASP.NET web application. Another part of GSB is a Winforms application that runs on a remote computer that hosts an IronPython engine and communicates back to the web server using Windows Communication Foundation (WCF). Hosted in the web browser is a source code editor with syntax highlighting and an interactive console – both of which communicate with the IronPython engine on the remote computer to execute Python code (and soon to be IronRuby using the DLR).

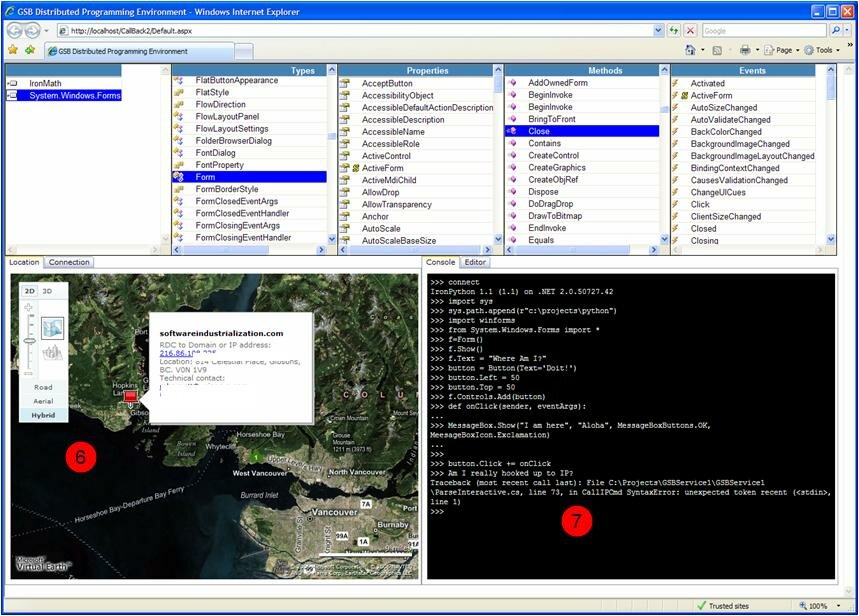

Also hosted in the web browser is a Virtual Earth control for locating remote computers, a Remote Desktop control to host a user session to the remote computer and a Smalltalk-like System or Class Browser for browsing classes (i.e. Types), member info, etc., that were loaded in the Winforms app (i.e. GSB Service) on the remote computer.

One of the features I am implementing is the capability to extract code comments from any assembly that was loaded into the System Browser via the remote service (i.e. the Winforms application on the remote computer). The idea is that as assemblies are loaded into the remote service, WCF communicates with the ASP.NET web server by passing up reflected assembly information (i.e. TypeInfo, MemberInfo, etc., using System.Reflection) that is then bound to a custom GridView control in the web browser, including code comments.
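To make the flow above a little more concrete, here is a minimal sketch in plain Python. It is only an analogy: it uses Python's `inspect` module in place of System.Reflection, and JSON in place of the WCF message, and the function name `reflect_module` and the row layout are assumptions made up for illustration, not GSB's actual code.

```python
import inspect
import json


def reflect_module(module):
    """Flatten a module's public classes and their members into grid-friendly rows."""
    rows = []
    for type_name, cls in inspect.getmembers(module, inspect.isclass):
        if type_name.startswith("_"):
            continue
        for member_name, member in inspect.getmembers(cls):
            if member_name.startswith("_"):
                continue
            is_method = callable(member)
            rows.append({
                "type": type_name,
                "member": member_name,
                "kind": "method" if is_method else "attribute",
                # Only callables carry their own comment; getdoc may return None
                "comment": (inspect.getdoc(member) or "") if is_method else "",
            })
    return rows


if __name__ == "__main__":
    # The standard json module stands in for a loaded assembly here
    rows = reflect_module(json)
    # Serialise a few rows the way a remote service might before sending them on
    print(json.dumps(rows[:3], indent=2))
```

Each row carries the type, the member, its kind and its comment, which is roughly the shape a grid control would need to bind to.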

I implemented an ASP.NET AJAX hover menu that will display context-sensitive menu items depending on which grid you are hovering over. These menu items are helper functions (almost like macros) that will add the necessary IronPython (or IronRuby) code snippet to the console or code editor window, for example, to add an assembly reference, stub out methods, insert DSL, etc. For this post, I am discussing a way to view code comments that are part of an assembly: a menu item which, when clicked in the hover menu, will display a panel with the code comment (plus parameter info, etc.) for the item hovered over, depending on whether it is a type, method, prop, etc. Think of it as a web-based version of Lutz Roeder’s cool Reflector, but in a Smalltalk-like System Browser.
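As a rough sketch of what such helper functions might generate, the following plain Python builds snippet text per grid. The grid names, menu labels and function names are all hypothetical; the only part taken from IronPython itself is the generated `import clr` / `clr.AddReference(...)` pair, which is how IronPython references an assembly.

```python
def add_reference_snippet(assembly_name):
    """Return IronPython source that references an assembly by name."""
    return 'import clr\nclr.AddReference("{0}")\n'.format(assembly_name)


def import_type_snippet(namespace, type_name):
    """Return source that imports one type from a .NET namespace."""
    return "from {0} import {1}\n".format(namespace, type_name)


def stub_method_snippet(method_name, parameter_names):
    """Return an empty method stub with the given parameter names."""
    return "def {0}({1}):\n    pass\n".format(method_name, ", ".join(parameter_names))


# Hypothetical mapping from the grid being hovered over to its menu items.
MENU_ITEMS = {
    "assemblies": {"Add reference": add_reference_snippet},
    "types": {"Import type": import_type_snippet},
    "members": {"Stub out method": stub_method_snippet},
}


def build_snippet(grid, menu_item, *args):
    """Look up the helper for a grid's menu item and generate its snippet."""
    return MENU_ITEMS[grid][menu_item](*args)


if __name__ == "__main__":
    print(build_snippet("assemblies", "Add reference", "System.Windows.Forms"))
    print(build_snippet("types", "Import type", "System.Windows.Forms", "Button"))
    print(build_snippet("members", "Stub out method", "on_click", ["sender", "event_args"]))
```

Keeping the menu as a lookup table means a new grid or menu item is one more entry rather than another branch in the menu code.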

As an aside, I have one (major) design issue with the way assemblies are packaged out of Visual Studio as there is no way to include the code comments that you meticulously put in your source code files into the actual assembly itself. It is packaged as a separate XML file. In my mind, packaging the code comments in a separate file simply breaks the cardinal rule of encapsulation. I wish Visual Studio at least gave you the option to embed your code comments directly into the assembly itself.

OK, so how to get at the code comments? Searching through my vast libraries of reusable open source code on the internet, I came across this post and a nicely packaged class called XMLComments. Only takes one line of code to implement and retrieve code comments. Perfect. Thanks Stephen Toub!

I used System.Windows.Forms.dll as the guinea pig as it is a whopping 5 megs in size and so is the companion XML code documentation file. Everything seems to work OK, but I have a few observations. It seems that even in Microsoft’s own code documentation, only 50% of the 2,200+ Types in the assembly have a code comment. Also, what about assemblies that don’t have the companion XML file and therefore no code comments at all?
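For readers who have not looked inside one of these companion files, here is a small sketch in plain Python of reading comments out of it and measuring how many types are documented. It is not the XMLComments class; the `Demo` names and sample XML are made up, and the only thing assumed from .NET is the documented file layout, where each `member` element has a `name` such as `T:Namespace.Type` and a `summary` child.

```python
import xml.etree.ElementTree as ET

# Made-up sample in the layout of a .NET XML documentation file
SAMPLE_XML = """<doc>
  <assembly><name>Demo</name></assembly>
  <members>
    <member name="T:Demo.Widget">
      <summary>Represents a widget.</summary>
    </member>
    <member name="M:Demo.Widget.Spin(System.Int32)">
      <summary>Spins the widget.</summary>
      <param name="turns">Number of turns.</param>
    </member>
  </members>
</doc>"""


def load_comments(xml_text):
    """Map each member ID (e.g. 'T:Demo.Widget') to its summary text."""
    root = ET.fromstring(xml_text)
    comments = {}
    for member in root.iter("member"):
        summary = member.find("summary")
        text = "".join(summary.itertext()) if summary is not None else ""
        # Collapse the line breaks and indentation found in real files
        comments[member.get("name")] = " ".join(text.split())
    return comments


def type_coverage(type_names, comments):
    """Return the fraction of the given types that have a non-empty summary."""
    if not type_names:
        return 0.0
    documented = sum(1 for name in type_names if comments.get("T:" + name))
    return documented / len(type_names)


if __name__ == "__main__":
    comments = load_comments(SAMPLE_XML)
    print(comments.get("T:Demo.Widget", ""))
    # Demo.Gadget has no entry, like a type that shipped without a comment
    print(type_coverage(["Demo.Widget", "Demo.Gadget"], comments))
```

A type with no entry simply comes back empty, which is the same situation as an assembly that ships with no XML file at all.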

There are a couple of choices, one is to extend the XMLComments class to derive some form of comments. Ultimately, I would like to be able to build comments into the assembly (separate XML file or assembly) by using GSB’s source code editor and the necessary comments syntax to inject or attach comments to existing assemblies that don’t have code comments (loaded into the System Browser).

[Update August 14, 2007: I recently came across “Documenting .NET Assemblies” by Robert Chartier (Thanks Robert!) that does a really nice and fast job of documenting assemblies that… have no documentation. Exactly what I was looking for. Robert has done a really nice job here, and has given me a few ideas to rework the System.Reflection code I already use to pull Assembly Names, Types, Member Info, etc.]

How important is this feature to the project? Admittedly it is not a core feature, but at the same time, the point of the System Browser is to browse assemblies for reuse, or more importantly, for programming at a higher level of abstraction, so having code comments that came with the assembly, or being able to attach your own code comments to the existing assembly, would be a good thing. In my previous life in the electronics R&D industry, getting a component, like an IC, without the accompanying documentation in the package rendered the component useless. Looking at the Types list in the screenshot above, what do you think AmbientValueAttribute does? I am not sure what to make of our software development industry, where source code documentation is either non-existent or in a form or place that makes it virtually unusable.

Reusing assemblies to program at a higher level of abstraction is another topic and several posts in and of itself. There seem to be a lot of issues (angst?) in our industry about reusing other people’s source code or assemblies. I do it all the time, both professionally (aka my day job) and in my little hobby here. In fact the majority of GSB reuses several source code libraries and assemblies. Without the many people that have taken the trouble to build the open source components that make up this application (aside from my own code), I would never be in the position I am today with a working application.

Anyway, I digress. Displaying code comments is one feature of a growing list of features I collected after presenting GSB to a few potential users (i.e. dynamic programmers). Based on the growing feature list, I still have a long way to go – not the 90% complete I alluded to in my previous post. Also, I have decided to release GSB as open source when the DLR (with IronPython, IronRuby, Dynamic VB and Managed JScript) reaches RTM. This is going to take a little bit of architectural refactoring (mostly the hosting namespace) to plug in the DLR engine and provide multi dynamic language support much like seen in this excellent video with John Lam and Jim Hugunin. All good though as I have nothing but time, yes

January 30, 2008 Update - Global System Builder is available for download at: http://www.codeplex.com/gsb/

Official web site: http://globalsystembuilder.com

Wednesday, 11 July 2007

Oxymoron? Could be, but all too real in the world of software start-ups, in my opinion. The following is a short story offering some lessons learned for software developers interested in starting their own company. Why? I wish I had had this advice when I started my own company in 2001, but it was difficult to find any material specifically targeted at software developers who think they have a good product idea and want to bring it to market.

First let’s talk about product success. My team and I created a product called Bridgewerx that used BizTalk Server as its integration engine. BizTalk Server is a middleware product from Microsoft. Whether you call it an Enterprise Service Bus, an Internet Service Bus, an Internet Data Router (which is what we called our product back in 2002 when no such term existed) or a SOA “type” product, or whether it is delivered as SaaS or packaged as an Integration Appliance or… really does not matter, because these are mostly marketing words that basically mean the same thing: a loosely-coupled, message-based application integration server. Purists will disagree, but I can make BizTalk meet any of these terms.

As the saying goes, practice makes perfect. When you perform similar tasks over and over again, generally speaking, you get good at them. As a Systems Integrator, we designed and delivered over 30 application integration solutions to our customers over a 4-year span. We thought we were pretty smart about it, generalizing components and reusing them for the next job. In fact we got so “practiced” that we were more or less assembling reusable components, and the only customizations were customer-specific adapters, business rules, schemas and maps. Regardless of the type of application integration work we did, these patterns occurred every time. We became experts at developing BizTalk solutions using several design patterns and practices we had discovered and refined over this 4-year period.

Then we thought, we could turn this into a product. We could take our design patterns, best practices and embody them into a graphical design time tool that allows Business Analysts to specify application integrations. The tool would capture all of the data (and metadata) necessary to fully describe an application integration scenario (even complicated ones). The output of the designer tool would populate and configure a Visual Studio solution with several projects that contained source artifacts from our library of reusable components, (e.g. Orchestration patterns similar to C# generics, runtime message envelope, utilities, etc.), plus user defined schemas, maps, business rules and even custom assemblies.

Through scripting, we automated the build and packaging process so the final deliverable was an MSI that a customer could run on their target infrastructure and voila, a completed application integration project, ready for operation.

Anyone who has been involved in an application integration project of any size knows how difficult it is. We called ourselves plumbers because it was a dirty and complicated job. Application integration inherently deals with distributed systems, not just a single application, so complexity goes up, along with the myriad of file formats per integration. If you have been there, you know what I am talking about.

So what? Well, our product code generates “complete” application integration solutions from a design time tool output specification. That’s the one sentence description. This means the amount of highly skilled, intensive manual labor in designing and coding the solution is dramatically reduced: at least by a factor of 10X and in some cases by 100X. In the extreme cases of very large scale application integration with 100+ points of integration, the work is not even feasible using traditional (i.e. manual labor) techniques.

We believe this to mean product success. It successfully answers a difficult problem in our software development world in the domain of application integrations. It provides at least a 10X improvement over current methods and tools.

If you want to read more about the design of the product, you can click on the Bridgewerx category on the right side of my blog. If you want to see a one hour movie demonstrating Bridgewerx, sponsored by Microsoft (thanks Jim!), you can see it here. Finally, if you want to see a live demo, contact me at my email address at the bottom of the page.

Forrester Research says that “Application Integration is the top priority for 2007.” So we should be all set for business success, yes? No.

Business failure. As the President of the company for 4 years, a co-founder and co-creator of the product, I failed to realize the vision I had for my business. Why? It was not just one reason, but there were a few factors that occurred at key milestones in our company operations that really broke our company (figuratively and literally).

One mistake I made was not getting the right people aboard to help us from a business perspective. Involving business partners/advisors who have (very) limited understanding of software development and the target market in a highly advanced technology business is problematic. Even worse are business partners/advisors who were successful in the software business with one type of product and apply the same principles to a product and market that are completely different. That is disastrous, as was the case in my company.

My business partners had backgrounds in “traditional” software business/marketing, i.e. put the product in the sales channel and build up a base of customers. On the surface this makes sense, and they were going with what they knew. However, our product, using terminology from Geoffrey Moore’s “Crossing the Chasm”, was an innovative and disruptive product that required very early adopters. And from my perspective, once we had a half dozen early adopters, the play was to sell our IP to a much larger player that could integrate our innovation into their product. In our case, one play was to sell directly to Microsoft and have the innovation built into BizTalk Server. Hard to believe, but no effort was put into this while the company was operational. Another play was to sell to a much larger Systems Integrator, like Avanade, that performs large scale application integrations, to give them a competitive advantage. Yet another exit strategy was to take the innovation and apply it to another middleware or SOA product. Based on my latest research, it appears we still have the market advantage of being the only vendor to code generate “complete” application integration solutions from a design time tool output specification. Technically speaking, using Microsoft terminology, our design time tool is a graphical Domain Specific Language (DSL) and our code generation system is a Software Factory. Innovative and disruptive? Yes. Easily understood? No.

Lesson #1 Make sure your business partners and advisors fully understand your product’s marketplace and value proposition, especially if it is an innovative, disruptive product.

That was my vision and intent. However, dear fellow software developers looking to bring your product to market, I made one fatal mistake. I lost control of my company through share dilution. Acquiring the people who could realize the vision I had required giving up shares as, of course, there was little money to pay large salaries. Or at least so I thought. Once you lose your controlling interest in the company, well, you know, other people take advantage of this for their own self-interest, and the company’s vision and product change direction, not necessarily for the better.

Lesson #2 Keep a controlling interest in your own software company at all costs.

Another mistake I made was not keeping the professional services division of the company running to bring in cash flow. It seems obvious enough that you cannot turn an SI into an ISV overnight. But this was lost on me and my business partners/advisors, who were pushing just to raise capital. We could have also acquired another professional services company that was similar to ours (Hi Mike and Jame!) to not only bring in additional pro services dollars, but also prime the pump with existing customers who might be interested in trying our innovation out. This would have reduced the dilution of our company before we even got started. Unfortunately, our advisory board and other business executives did not understand this. It was an opportunity missed and, in hindsight, may have been the tipping point.

Lesson #3 Find alternate methods of funding, particularly if you are an SI that already has a revenue channel.

Speaking of funding, if you need to raise funds, ensure that you can raise enough. Research shows that to fully capitalize a product as a SaaS requires $35 million to be raised over 5 to 7 years. Here in the Great White North, we were able to raise $5 million tops. If this were a car race, we did not even have a chance to qualify, let alone participate in the actual race.

Lesson #4 If you need to raise capital make sure you can raise enough.

Followed closely by:

Lesson #5 Ensure the people that are raising the money actually have a track record of raising that kind of money.

I know it sounds simple enough, but at the time… At the time there was lots of bravado and speculation, which on the bottom line means absolutely nothing.

The final lesson I have yet to figure out. Based on my market research, our company has a product innovation that does something no other product does in our market space of application integration. Market research shows that application integration is a top priority for 2007. What up? My guess is that we are yet another company that has fallen into the Chasm.

On a personal note, my hope is that this short story provides a few tidbits of advice (and a little bit of hard-earned wisdom) that other budding software development entrepreneurs will find useful, which is the reason I wrote it in the first place. Secondly, am I bitter? No, it was one of the most exciting and exhilarating business adventures that I have been on. Would I do it again? Absolutely! Of course I would do it differently, but no regrets.

For software developers wanting to unshackle themselves from the security blanket of the day job to develop their idea, vision, product: if you can’t stop thinking about it… Carpe diem baby!

Thursday, 28 June 2007

This is an open source software project that is highly experimental. In fact, it might be just plain crazy. Our industry is abuzz about Web 2.0, SOA, distributed computing, etc. Yet, what tools do we have to support distributed programming?

What I mean by distributed programming is the ability to program multiple remote computers (i.e. servers) from within one IDE instance, or as I call it, a DPE – Distributed Programming Environment:

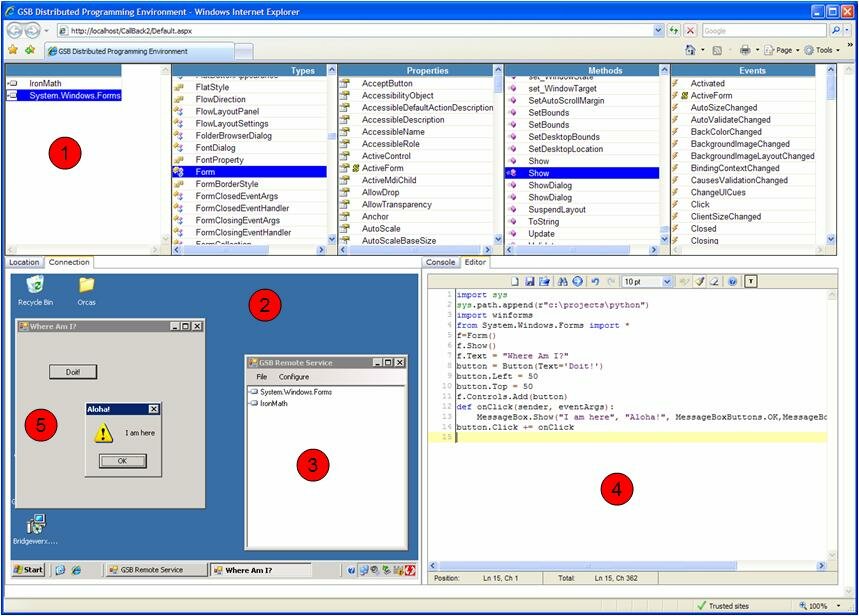

This ASP.NET AJAX DPE consists of the following parts labeled in red:

1) An Object or Class Browser, similar to Lutz Roeder's outstanding Reflector, except mine is laid out in the old school Smalltalk System Browser format. Where are the assemblies IronMath and System.Windows.Forms coming from?

2) This is a Remote Desktop Connection (RDC) that allows connections to multiple remote computers. One tab per connection.

3) This is a Windows Forms application (or service if you will) that runs on the remote computer(s) that allows you to add assemblies to be reflected. It uses Windows Communication Foundation (WCF) to communicate with the web server hosting the DPE, which then displays the reflected assembly information in the browser window GridViews. In addition, this Windows Forms application hosts IronPython (and soon the DLR) which also communicates with the web server via WCF so that IronPython code can be executed on the remote server(s).

4) An IronPython source code editor which provides syntax highlighting, handling multiple source code files, etc. Again, using WCF to communicate with the IronPython DLL that is running on the remote server(s).

5) The result of running the IronPython code that is displayed in the editor window.

6) To find remote computers, I use Virtual Earth’s map control to display remote computer locations. Optionally, a MapPoint Web Service can be used to upload custom locations and attributes.

7) Oh yeah, an interactive command line console is a must have.

Which remote computer I am programming is determined by which tab has been selected. Meaning that when I click on any tab, any related context, like other dependent tabs and grids, is switched too. I add distributed computers by adding tabs for each RDC session, a source code editor, and an interactive interpreter (i.e. console) per remote computer.
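The tab-driven context switch described above can be sketched as a small lookup structure. This is only an illustration in plain Python, not GSB's actual code: the `RemoteContext` and `Workspace` names, their fields, and the host names are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class RemoteContext:
    """Everything tied to one remote computer (hypothetical grouping)."""
    host: str
    editor_files: list = field(default_factory=list)      # open source files
    console_history: list = field(default_factory=list)   # commands sent so far


class Workspace:
    """Tracks one context per tab and which tab is currently selected."""

    def __init__(self):
        self._contexts = {}
        self._active = None

    def add_computer(self, host):
        # Adding a remote computer creates its editor and console state together.
        self._contexts[host] = RemoteContext(host)
        if self._active is None:
            self._active = host
        return self._contexts[host]

    def select_tab(self, host):
        # Selecting a tab makes that computer the target of every dependent view.
        if host not in self._contexts:
            raise KeyError(f"no tab open for {host}")
        self._active = host
        return self._contexts[host]

    @property
    def active(self):
        # Context of the currently selected tab.
        return self._contexts[self._active]


workspace = Workspace()
workspace.add_computer("server-a")
workspace.add_computer("server-b")
workspace.select_tab("server-b").console_history.append("import clr")
```

Keeping one record per remote computer means the editor, console and grids can all ask the workspace for the active context instead of tracking the selected tab themselves.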

Think of the web server as a middle-tier to the WCF enabled remote services that host the code for reflecting assemblies and executing Python code.

As I mentioned earlier, I will be embedding the DLR, which will add support for more dynamic languages. Also note that the web-based console window is generic in the sense that it can be a console to a remote cmd window or PowerShell window or… Same goes for the source code editor: its syntax highlighting also supports multiple languages.

Like I say, it will either be useful or just plain crazy. That’s why I call it Global System Builder.

Saturday, 23 June 2007

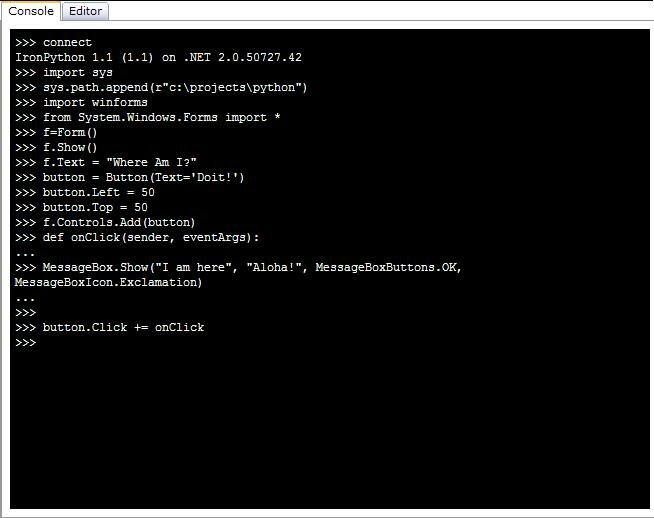

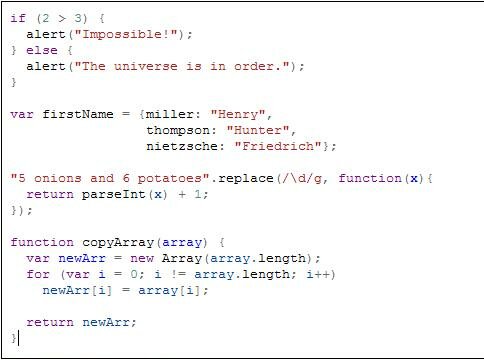

Previously, I discussed a web-based source code editor for IronPython, which is part of a larger application that I am working on. Most of the editor is complete; I am just working out the details of handling multiple source code files. Today we will drive IronPython’s interactive interpreter using a web-based console, which is also part of my application.

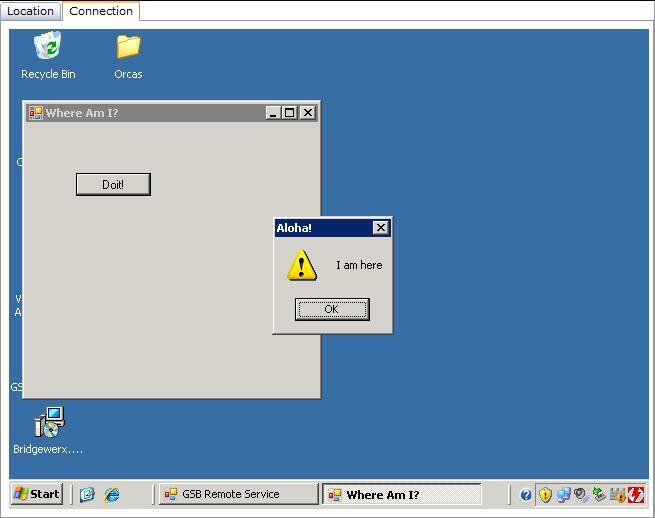

Using the console, we can whip up a simple Windows Forms application in seconds:

And here is the output:

The console is based on two components: Sam Stephenson’s Prototype JavaScript framework and Jeff Lindsay’s Joshua, which provides the console GUI and which I modified to work with IronPython.

Running a web console over the internet presents a few challenges. Everywhere you read, AJAX is the sh!t. I use a ton of it in the app I am building. The A stands for Asynchronous and therefore, as some have stated, synchronous over HTTP is bad form mate. Well, in the case of a console application, I think a synchronous call is one (only?) way to make it “really” work:

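    // Synchronous call to the server: posts the IronPython (IP) command string
    // and returns the response text, or false if no request object is available.
    function getFile(url, passData)
    {
        var AJAX; // declared locally so the request object does not leak into global scope
        if (window.XMLHttpRequest)
        {
            AJAX = new XMLHttpRequest();
        }
        else
        {
            // Fallback for older versions of Internet Explorer
            AJAX = new ActiveXObject("Microsoft.XMLHTTP");
        }
        if (AJAX)
        {
            // The third argument (false) makes the request synchronous
            AJAX.open("POST", url, false);
            AJAX.setRequestHeader("Content-type", "text/xml");
            AJAX.send(passData);
            return AJAX.responseText;
        }
        else
        {
            return false;
        }
    }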

One nit pick with the ASP.NET AJAX framework is that you can’t do synchronous. I would hope that the framework would support this option as a) other frameworks provide it and b) I want it man! The point of a framework is to provide options so that a variety of similar, but different business requirements can be met with “one” AJAX framework. I am already using 3 AJAX frameworks as each one brings a unique piece of functionality that I require. Who knows, maybe it will be more by the time I am finished.

If you look at the console closely, you will see one small GUI issue. When parsing interactive = true with Python, visually, the next line of Python code should reside beside the “…” and not on the next line beside the command prompt (i.e. >>>). Also note that the 4-space indent after the def statement does not work at the moment.
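The prompt issue above comes down to deciding whether the text typed so far is a complete statement. As a rough sketch of that decision, the snippet below uses `codeop.compile_command` from the standard CPython library. It is a stand-in for illustration, not the IronPython hosting call the console uses, and `next_prompt` is a hypothetical helper name.

```python
import codeop

PRIMARY_PROMPT = ">>> "
CONTINUATION_PROMPT = "... "


def next_prompt(lines):
    """Return the prompt to show after the given lines have been typed."""
    source = "\n".join(lines)
    try:
        # compile_command returns None when the source is valid so far
        # but incomplete, and a code object when it is ready to run.
        code = codeop.compile_command(source, "<console>", "single")
    except (SyntaxError, ValueError, OverflowError):
        # Invalid input: the statement is abandoned and the console starts afresh.
        return PRIMARY_PROMPT
    return CONTINUATION_PROMPT if code is None else PRIMARY_PROMPT


after_def = next_prompt(["def add(a, b):"])
after_body = next_prompt(["def add(a, b):", "    return a + b", ""])
```

Under this check, a console would keep showing the continuation prompt after the `def` line until a blank line closes the block, and only then return to the primary prompt.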

Aside from a couple of GUI issues, and a missing blinking cursor, there is one last feature to be implemented and then the console will be complete.

The astute reader may notice that the Windows application is running in a Terminal Services session, which is being hosted in my web browser. Did I remote into the web server? No. On the client computer? No. Where is the Windows application running?

Friday, 15 June 2007

Why web-based? Well, the IronPython source code editor I am working on is one piece of a larger web-based, open source application that I am building. The reasons why it needs to be web-based will become clear in future posts.

In the meantime, I thought I would write about some of the available components that provide real-time syntax highlighting and other features one might want in a web-based source code editor. After extensive research, there are 3 web-based source code editors that I would recommend.

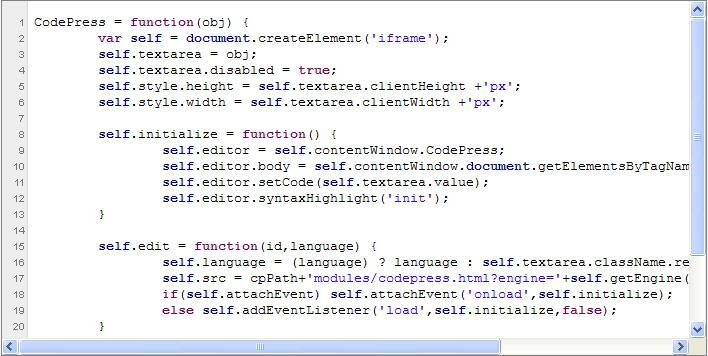

The screen shot above comes from a portion of the web application that I am building. I am using Christophe Dolivet’s fantastic EditArea. EditArea is written in JavaScript and really is quite outstanding given the number of features it has, particularly since it is free (released under the LGPL license).

One of my main requirements is that it can work with ASP.NET AJAX (a free framework) and can be embedded in a TabControl as you see in the screen shot. I will discuss the web-based IronPython interactive console in another post.

I am really impressed with what Christophe has done. Not only is EditArea fully featured, but the JavaScript source code is extremely well written using prototypes. Further, the documentation is excellent – you would think this is a commercial source code editor. EditArea supports multiple languages, both in the software itself and in the syntax highlighting it supports, for example, in my case English and Python.

Another real-time syntax highlighting source code editor I have come across is CodePress by Fernando M.A.d.S. It is also written in JavaScript and covered by LGPL. I really liked this editor as well. While it is not as full featured as EditArea, it does a very good job. It also supports many languages, but not Python at the moment. I see it is on the todo list. While I could get it to run in a bare bones ASP.NET AJAX application, I could not get it to run embedded in a tab control. However, I think the limitation may be my JavaScript coding skills (I am a C# programmer hooked on Intellisense) and not necessarily CodePress itself.

Syntax Highlighter by Marijn Haverbeke is another web-based, real-time syntax highlighter. It is released under a BSD-like license. It works quite well, but is not as fully featured as the other two editors. However, it is quite fast and seamless. It does not support Python syntax highlighting, but as in the case of CodePress, that would be fairly easy to implement. What I found really interesting was Marijn’s story on how it was designed. Fascinating Captain!

Thanks to Christophe, Fernando and Marijn for creating and developing these real-time syntax highlighting source code editors. Their work has not only saved me a lot of time I would have spent building these from scratch, but also proves a theory I have about software industrialization. That is, it has been done before and I don’t need to reinvent the wheel. Some people call this mashups, others reusable software or open source, or… No matter what you call it, I am thankful that so many people are willing to share their hard work.

When will it be released? Not sure, as there is still lots of work to be done and I can only work on it part time. I wish it was my full-time job!

Saturday, 09 June 2007

Years ago I lived in a Smalltalk world and never knew how good I had it. As Larry O’Brien says, “Smalltalk has enough proponents so you're probably at least aware that its browser and persistent workspace are life-altering (if you aren't, check out James Robertson's series of screencasts).”

Unfortunately, in the Great White North, not too many Smalltalk jobs were available and in fact it really boiled down to programming Windows or Unix. And somehow I found myself in the Windows world using Visual Studio and Visual Basic. In some respects, VB was almost Smalltalk like – I loved the interpreter of stepping through code, finding a mistake, fixing it on the spot, setting next statement to run a few lines back and stepping through and keep on going. I found myself enjoying the rhythm of it and how productive I was. That was the thrill for me.

Then came C# which is a statically typed language, but what really got me was the rote of write code, compile it, fix compile errors, then compile it again, fix runtime errors, compile it again, step through, note the error, stop debugging, edit code, compile it again, etc. As you can imagine from the VB world, the most common error I got was, “are you missing a cast?” Also, my productivity just was nowhere near as fast.

A year or so ago, I came across IronPython. What I loved immediately was the interactive console that interpreted code on the fly. I type in my line of code and execute it with immediate results. For me, I work best like this. It allows me to experiment with CLR types and once I have it figured, I can then cut n paste into a code file and build up my application.

What is really exciting to me is that the DLR is intended to support a variety of dynamic languages, such as IronRuby by John Lam and others… yes, even Smalltalk. Way to go Peter Fisk!

© Copyright 2010 Mitch Barnett - Software Industrialization is the computerization of software design and function.
